Ijraset Journal For Research in Applied Science and Engineering Technology

Audio / Text to Sign Language Translator using Python

Authors: Dhulipalla Tejaswi, Kalva Yvonne Shiny, Challa Iswarya, Nimmakayala Vasanthi

DOI Link: https://doi.org/10.22214/ijraset.2023.51579

Abstract

Deaf people often miss out on experiences that hearing people take for granted, such as everyday communication, playing computer games, and attending seminars or video conferences. Communication with hearing people is the biggest difficulty they face, because not every hearing person knows sign language. The aim of our project is to develop a communication system for deaf people that converts an audio message into sign language. The system takes audio as input, converts the recorded message into text, and displays the relevant predefined Indian Sign Language images or GIFs. Using this system makes communication between hearing and deaf people easier.

I. INTRODUCTION

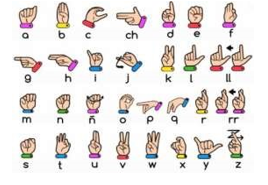

Sign language is often called the mother tongue of deaf people. It combines hand movements, arm or body movements, and facial expressions. There are 135 sign languages in use around the world.

Some of them are American Sign Language (ASL), Indian Sign Language (ISL), British Sign Language (BSL) and Australian Sign Language, among many others. We use Indian Sign Language in this project. This system allows the deaf community to enjoy the things hearing people do, from daily interaction to accessing information.

This application takes speech as input, converts it into text and then displays the corresponding Indian Sign Language images.

- The front end of the system is designed using EasyGui.

- Speech input is captured from the microphone using the PyAudio package.

- The speech is recognized using the Google Speech API.

- The text is then preprocessed using NLP (Natural Language Processing).

- Finally, dictionary-based machine translation is performed.
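The processing steps listed above can be sketched as a small Python pipeline. This is a minimal illustration under stated assumptions, not the authors' code: the function names, the sign-file paths and the three-word dictionary are hypothetical, and the speech stage is passed in as a callable so the text stages can run without a microphone. The optional live stage uses the third-party `SpeechRecognition` package, whose `Recognizer.recognize_google` method sends audio to Google's speech recognition web service and whose `Microphone` class relies on PyAudio.

```python
from typing import Callable, Dict, List

# Hypothetical dictionary: English word -> predefined ISL image/GIF file.
SIGN_DICTIONARY: Dict[str, str] = {
    "hello": "signs/hello.gif",
    "how": "signs/how.gif",
    "you": "signs/you.gif",
}


def preprocess(text: str) -> List[str]:
    """Replace punctuation with spaces, lower-case, and split into word tokens."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in text)
    return cleaned.lower().split()


def translate(tokens: List[str]) -> List[str]:
    """Dictionary-based translation: keep only words that have a predefined sign."""
    return [SIGN_DICTIONARY[t] for t in tokens if t in SIGN_DICTIONARY]


def run_pipeline(recognize_speech: Callable[[], str]) -> List[str]:
    """Speech -> text -> preprocessed tokens -> sign files to display."""
    text = recognize_speech()
    return translate(preprocess(text))


def google_speech_from_microphone() -> str:
    """Optional live stage; needs the SpeechRecognition and PyAudio packages."""
    import speech_recognition as sr  # imported lazily so the sketch runs without it

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:  # Microphone uses PyAudio internally
        recognizer.adjust_for_ambient_noise(source)
        audio = recognizer.listen(source)
    return recognizer.recognize_google(audio)


if __name__ == "__main__":
    # A fixed string stands in for the speech stage so the example runs offline.
    print(run_pipeline(lambda: "Hello, how are you?"))
```

Passing the speech stage in as a function keeps the text-processing stages testable without audio hardware; calling `run_pipeline(google_speech_from_microphone)` would use the live microphone instead.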

Sign language is a visual language used by deaf people that relies on the face, hands and eyes instead of the vocal tract. A sign language recognizer is a tool for recognizing the signs of deaf and mute people. Gesture recognition is an important and difficult topic, partly because segmenting a foreground object such as the hands from a cluttered background is a challenging problem.

A. Motivation

Communication is one of the basic requirements for survival in society. Deaf and mute people communicate among themselves using sign language, but hearing people find their language difficult to understand. Extensive work has been done on American Sign Language recognition, but Indian Sign Language differs significantly from American Sign Language.

ISL uses two hands for 20 of the 26 letters, whereas ASL uses a single hand. Using both hands often leads to occlusion of features because the hands overlap. In addition, the lack of datasets, together with regional variation in signs, has limited efforts in ISL gesture detection. Our project aims to take a basic step toward bridging the communication gap between hearing people and deaf and mute people using Indian Sign Language. An effective extension of this project to words and common expressions could not only help deaf and mute people communicate faster and more easily with the outside world, but also boost the development of autonomous systems for understanding and aiding them. Such a system would make understanding easier and ease communication even in public places.

B. Objective

- To understand the day-to-day difficulties faced by specially abled people and to find a solution that is cost-effective, easy for people to adopt, and easy to use.

- To understand the requirements of the impaired community and find solutions that make a difference.

- To improve the physical and mental well-being of specially abled people and their overall quality of life.

C. Existing System

The existing system uses Tkinter, the standard GUI library in Python. It takes input in audio format and, based on that input, displays a predefined gesture for each letter on the screen. The predefined database contains gestures for the letters A to Z only.
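The letter-by-letter display of the existing system can be sketched as a function that maps each letter of the recognized text to a predefined gesture image. The `gestures/<LETTER>.jpg` layout is an assumption for illustration, and the Tkinter window that would show the images is omitted. Characters outside A to Z are skipped, because the existing database covers only those letters.

```python
import string
from typing import List

GESTURE_DIR = "gestures"  # assumed folder holding A.jpg ... Z.jpg


def letters_to_gestures(text: str) -> List[str]:
    """Return the gesture image path for each A-Z letter in the text, in order."""
    return [f"{GESTURE_DIR}/{ch}.jpg"
            for ch in text.upper()
            if ch in string.ascii_uppercase]  # spaces, digits, punctuation skipped


if __name__ == "__main__":
    print(letters_to_gestures("How Are You"))
```

Spelling every word this way is simple but slow to read, which is the limitation the word-level dataset of the proposed system addresses.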

D. Proposed System

In the proposed system, we build a sign language converter using the Django framework. ASGI (Asynchronous Server Gateway Interface) and WSGI (Web Server Gateway Interface) are standard interfaces for communication between the web server and the web application. The animations are created with Blender, a free and open-source 3D modelling tool. A natural language processing algorithm is used for text processing, tokenization and stop-word removal. We created an animation dataset consisting of the digits 0 to 9, the letters A to Z, and the most frequently used words in the English language.
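The dictionary lookup of the proposed system can be sketched as follows: remove stop words, use a whole-word animation when one exists, and otherwise fall back to the animations for the individual letters and digits. The stop-word list, the set of available word animations and the `.mp4` file names are hypothetical placeholders. A real system would more likely take tokenization and stop words from a library such as NLTK.

```python
from typing import Iterable, List, Set

# Hypothetical contents of the animation dataset.
WORD_ANIMATIONS: Set[str] = {"hello", "thank", "you", "name"}
CHAR_ANIMATIONS: Set[str] = set("abcdefghijklmnopqrstuvwxyz0123456789")

# A tiny illustrative stop-word list (NLTK's English list is much longer).
STOP_WORDS: Set[str] = {"a", "an", "the", "is", "am", "are", "to", "of"}


def remove_stop_words(tokens: Iterable[str]) -> List[str]:
    return [t for t in tokens if t not in STOP_WORDS]


def tokens_to_animations(tokens: Iterable[str]) -> List[str]:
    """Map each token to animation files, spelling out words with no animation."""
    clips: List[str] = []
    for token in remove_stop_words(t.lower() for t in tokens):
        if token in WORD_ANIMATIONS:
            clips.append(f"{token}.mp4")
        else:
            # Fallback: one clip per letter or digit; other characters are skipped.
            clips.extend(f"{ch}.mp4" for ch in token if ch in CHAR_ANIMATIONS)
    return clips


if __name__ == "__main__":
    print(tokens_to_animations(["Hello", "my", "name", "is", "Ravi", "2"]))
```

Word-level animations are preferred because they are faster to read, while the letter and digit fallback guarantees that names and numbers outside the dataset can still be signed.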

II. LITERATURE SURVEY

- M. Xie et al. (2019): In this paper, the authors present "End-to-End Residual Neural Network with Data Augmentation for Sign Language Recognition", in which a residual neural network is used for American Sign Language (ASL) recognition. The study's sign language dataset contains 36 groups of signs, covering the digits 0-9 and the letters A-Z. Using these techniques, the authors obtained 17,640 sign language images as model training data.

- K. Saija et al. (2019): In this paper, the authors present "WordNet Based Sign Language Machine Translation: from English Voice to ISL Gloss", which proposes an end-to-end method for converting English speech into Indian Sign Language (ISL) gloss (a written form of sign language) to help deaf people communicate with others.

- S. Shrenika et al. (2020): In this paper, the authors present "Sign Language Recognition Using Template Matching Technique", an image-processing approach to sign language recognition that first converts the image into grayscale and then into RGB, and finally produces the result using the template matching technique.

- S. Tornay et al. (2020): In this paper, the authors present "Towards Multilingual Sign Language Recognition", which notes that sign language recognition involves modelling multichannel information such as hand shapes and hand movements. This also requires sufficient sign-language-specific data, which is a challenge because sign languages are inherently under-resourced. Hand shape information can be estimated by pooling resources from multiple sign languages.

In experiments on detecting phishing URLs, tests were performed using 4,500 URLs and several classification algorithms; the observed results show that tree-based classification gives the highest accuracy.

A WSGI application is a callable that takes two parameters: environ, which holds information about the incoming request, and start_response, a callable that the application calls to send the HTTP status and response headers.

To make ASGI backward compatible with WSGI applications, an ASGI server must be able to run synchronous WSGI applications from within an asynchronous coroutine. In addition, ASGI receives incoming request information through the scope, while WSGI receives it through the environ dictionary. As a result, the ASGI scope must be mapped to a WSGI environ before the WSGI application is called.
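The mapping between the two request representations can be sketched as follows: to run a WSGI application under an ASGI server, the adapter builds a WSGI `environ` from the ASGI HTTP `scope`. This is a simplified illustration that assumes the request body has already been read and covers only the core keys; production adapters such as `WsgiToAsgi` in the `asgiref` package also handle threading and response streaming. The `hello_app` application and the default server address are hypothetical.

```python
import io
import sys
from typing import Any, Callable, Dict, List, Tuple


def scope_to_environ(scope: Dict[str, Any], body: bytes) -> Dict[str, Any]:
    """Build a minimal WSGI environ from an ASGI HTTP scope (simplified sketch)."""
    server_name, server_port = scope.get("server") or ("localhost", 80)
    environ: Dict[str, Any] = {
        "REQUEST_METHOD": scope["method"],
        "SCRIPT_NAME": scope.get("root_path", ""),
        "PATH_INFO": scope["path"],
        "QUERY_STRING": scope.get("query_string", b"").decode("latin-1"),
        "SERVER_NAME": server_name,
        "SERVER_PORT": str(server_port),
        "SERVER_PROTOCOL": "HTTP/" + scope.get("http_version", "1.1"),
        "wsgi.version": (1, 0),
        "wsgi.url_scheme": scope.get("scheme", "http"),
        "wsgi.input": io.BytesIO(body),
        "wsgi.errors": sys.stderr,
        "wsgi.multithread": True,
        "wsgi.multiprocess": False,
        "wsgi.run_once": False,
    }
    # ASGI headers are (name, value) byte pairs with lower-cased names.
    for raw_name, raw_value in scope.get("headers", []):
        name = raw_name.decode("latin-1").upper().replace("-", "_")
        value = raw_value.decode("latin-1")
        key = name if name in ("CONTENT_TYPE", "CONTENT_LENGTH") else "HTTP_" + name
        # Repeated headers are joined with commas, as in a CGI-style environment.
        environ[key] = environ[key] + "," + value if key in environ else value
    return environ


def hello_app(environ: Dict[str, Any], start_response: Callable) -> List[bytes]:
    """A hypothetical WSGI application used to exercise the mapping."""
    body = f"{environ['REQUEST_METHOD']} {environ['PATH_INFO']}".encode()
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [body]


if __name__ == "__main__":
    scope = {
        "type": "http",
        "http_version": "1.1",
        "method": "GET",
        "scheme": "http",
        "path": "/translate",
        "query_string": b"text=hello",
        "root_path": "",
        "headers": [(b"host", b"example.com"), (b"accept", b"text/html")],
        "server": ("127.0.0.1", 8000),
    }
    captured: List[Tuple[str, list]] = []
    environ = scope_to_environ(scope, b"")
    result = hello_app(environ, lambda status, headers: captured.append((status, headers)))
    print(captured[0][0], b"".join(result))
```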

While there are many features one can use to classify whether a website is spam, this project aims to use only URLs and limited metadata to decide whether web pages are spam. This way, potentially malicious URLs can be deprioritized during crawling, and the saved resources can be used to crawl more useful pages that are less likely to be malicious.
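Classifying a page from its URL alone usually starts by turning the URL into numeric features. The sketch below extracts a few lexical features with Python's standard `urllib.parse` and `ipaddress` modules; the particular feature set is an illustrative assumption, not the one used in the experiments described here.

```python
import ipaddress
from typing import Dict
from urllib.parse import urlparse


def url_features(url: str) -> Dict[str, int]:
    """Extract simple lexical features from a URL for a spam/malicious classifier."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    try:
        ipaddress.ip_address(host)  # raw IP hosts are a common warning sign
        host_is_ip = 1
    except ValueError:
        host_is_ip = 0
    return {
        "url_length": len(url),
        "host_length": len(host),
        "num_dots_in_host": host.count("."),
        "num_digits": sum(ch.isdigit() for ch in url),
        "num_hyphens": url.count("-"),
        "has_at_symbol": int("@" in url),
        "uses_https": int(parsed.scheme == "https"),
        "host_is_ip": host_is_ip,
        "path_depth": len([part for part in parsed.path.split("/") if part]),
    }


if __name__ == "__main__":
    print(url_features("http://192.168.0.1/login/verify-account?id=7"))
```

Because these features need only the URL string, they can be computed before a page is fetched, which is what allows suspicious URLs to be deprioritized during crawling.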

B. Algorithms And Techniques

Random Forest: The Random Forest algorithm is a powerful and versatile supervised machine learning algorithm that grows and combines multiple decision trees to create a "forest." It can be used for both classification and regression problems in R and Python. A Random Forest classifier trains several decision trees on various subsets of the given dataset and aggregates their predictions (by majority vote for classification or by averaging for regression) to improve predictive accuracy. It is based on ensemble learning, the process of combining multiple classifiers to solve a complex problem and improve the performance of the model.

Logistic Regression: Logistic regression is one of the most popular machine learning algorithms and belongs to the supervised learning family. It predicts a categorical dependent variable from a given set of independent variables, so the outcome must be a categorical or discrete value, such as Yes or No, 0 or 1, or True or False. Instead of giving exactly 0 or 1, however, it gives probabilities that lie between 0 and 1.
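The two prediction rules can be illustrated in plain Python, assuming already-trained models: the weights, bias and the three toy "trees" (written as hand-made threshold rules) are made-up values, and training is out of scope. The sketch shows a forest combining its trees by majority vote and logistic regression turning a weighted sum into a probability between 0 and 1 with the sigmoid function.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence

Features = Sequence[float]


def forest_predict(trees: List[Callable[[Features], int]], x: Features) -> int:
    """Random Forest classification: every tree votes and the majority class wins."""
    votes = Counter(tree(x) for tree in trees)
    return votes.most_common(1)[0][0]


def logistic_probability(weights: Features, bias: float, x: Features) -> float:
    """Logistic regression: the sigmoid of the weighted sum gives P(class = 1)."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    # Numerically stable sigmoid: avoids overflow in math.exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def logistic_predict(weights: Features, bias: float, x: Features,
                     threshold: float = 0.5) -> int:
    """Turn the probability into a 0/1 label using a decision threshold."""
    return int(logistic_probability(weights, bias, x) >= threshold)


if __name__ == "__main__":
    # Three hypothetical "trees", written as hand-made threshold rules.
    trees = [
        lambda x: int(x[0] > 50),
        lambda x: int(x[1] > 2),
        lambda x: int(x[0] + x[1] > 60),
    ]
    sample = [70.0, 1.0]
    print(forest_predict(trees, sample))  # votes 1, 0, 1 -> prints 1
    print(logistic_predict([0.05, 0.8], -3.0, sample))  # z is about 1.3 -> prints 1
```

Note that scikit-learn's `RandomForestClassifier` averages the trees' predicted class probabilities rather than counting hard votes; the two usually agree, and the hard vote is shown here because it is easier to read.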

C. Model Evaluation And Validation

We executed and evaluated our experiments on models created with SVMlight and the TensorFlow library, one representative of batch learning and one of online learning. When we used all features and a training set of 352,096 URL samples, both achieved an accuracy above 97.3%. For this project, our models must detect as many malicious websites as possible, even at the cost of flagging some good websites as malicious, because declaring a malicious website harmless is very costly, while declaring a harmless website malicious is much less so.
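The trade-off above is usually quantified with recall and precision alongside accuracy. The sketch computes them from confusion-matrix counts, treating "malicious" as the positive class; the counts in the example are invented for illustration and are not results from these experiments.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # malicious sites correctly flagged
    fp: int  # harmless sites wrongly flagged (the cheaper error)
    fn: int  # malicious sites missed (the expensive error)
    tn: int  # harmless sites correctly passed

    def accuracy(self) -> float:
        total = self.tp + self.fp + self.fn + self.tn
        return (self.tp + self.tn) / total

    def precision(self) -> float:
        flagged = self.tp + self.fp
        return self.tp / flagged if flagged else 0.0

    def recall(self) -> float:
        actual = self.tp + self.fn
        return self.tp / actual if actual else 0.0


if __name__ == "__main__":
    # Hypothetical counts: 1,000 URLs, of which 50 are malicious.
    m = Confusion(tp=30, fp=5, fn=20, tn=945)
    print(f"accuracy={m.accuracy():.3f} precision={m.precision():.3f} recall={m.recall():.3f}")
```

In this made-up example the accuracy is 0.975 while recall is only 0.6, so 40% of malicious sites slip through. When malicious sites are rare, accuracy alone can hide exactly the expensive error, which is why recall is the metric to favour here.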

IV. RESULTS AND ANALYSIS

Audio input is captured using the Python PyAudio module.
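Capturing a short recording with PyAudio and saving it as a WAV file for the speech recognizer might look like the sketch below. The sample rate, chunk size and five-second default are assumptions. Only the WAV helper uses the standard library; `record` needs the PyAudio package and a working microphone.

```python
import wave
from typing import BinaryIO, List, Union

RATE = 16000       # samples per second (assumed; a common rate for speech)
CHUNK = 1024       # frames read from the microphone per call
CHANNELS = 1       # mono input
SAMPLE_WIDTH = 2   # bytes per sample, matching 16-bit (paInt16) audio


def chunks_for(seconds: float) -> int:
    """Number of CHUNK-sized reads needed to cover the requested duration."""
    return int(RATE / CHUNK * seconds)


def record(seconds: float = 5.0) -> List[bytes]:
    """Read raw 16-bit mono audio from the default microphone with PyAudio."""
    import pyaudio  # imported here so the WAV helper works without PyAudio installed

    pa = pyaudio.PyAudio()
    stream = pa.open(format=pyaudio.paInt16, channels=CHANNELS, rate=RATE,
                     input=True, frames_per_buffer=CHUNK)
    try:
        return [stream.read(CHUNK) for _ in range(chunks_for(seconds))]
    finally:
        # Always release the audio device, even if a read fails.
        stream.stop_stream()
        stream.close()
        pa.terminate()


def save_wav(frames: List[bytes], target: Union[str, BinaryIO]) -> None:
    """Write raw frames as a WAV file (to a path or a binary file object)."""
    with wave.open(target, "wb") as wf:
        wf.setnchannels(CHANNELS)
        wf.setsampwidth(SAMPLE_WIDTH)
        wf.setframerate(RATE)
        wf.writeframes(b"".join(frames))
```

With PyAudio installed, `save_wav(record(), "input.wav")` records about five seconds from the default microphone and stores them in a file that speech-to-text tools can read.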

- Conversion of audio to text using the microphone.

- Dependency parser: used to analyse the grammar of the sentence and obtain the relationships between words.

- Speech recognition using the Google Speech API.

- Text preprocessing using NLP.

- Dictionary-based machine translation.

- ISL generator: produces the ISL form of the input sentence using ISL grammar rules.

- Generation of sign language with a signing avatar.
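The ISL generator step reorders the English sentence according to ISL grammar rules. The sketch below applies two simplified rules that are often assumed in English-to-ISL systems: articles and forms of "be" are dropped, and verbs move to the end of the sentence (subject-object-verb order). It assumes the sentence has already been tagged with Penn Treebank part-of-speech tags, for example by a parser; real ISL grammar is considerably richer than these two rules.

```python
from typing import List, Tuple

TaggedWord = Tuple[str, str]  # (word, Penn Treebank part-of-speech tag)

# Words dropped under the simplified rules: articles and forms of "be".
DROPPED_WORDS = {"a", "an", "the", "am", "is", "are", "was", "were", "be", "been", "being"}


def to_isl_order(tagged: List[TaggedWord]) -> List[str]:
    """Drop articles and 'be' forms, then move verbs (VB* tags) to the end."""
    kept = [(word, tag) for word, tag in tagged if word.lower() not in DROPPED_WORDS]
    others = [word for word, tag in kept if not tag.startswith("VB")]
    verbs = [word for word, tag in kept if tag.startswith("VB")]
    return others + verbs  # subject-object-verb order


if __name__ == "__main__":
    sentence = [("I", "PRP"), ("am", "VBP"), ("eating", "VBG"),
                ("an", "DT"), ("apple", "NN")]
    print(to_isl_order(sentence))  # ['I', 'apple', 'eating']
```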

Output generation: The output for a given English text is produced by generating its equivalent sign language depiction. The output of this system is a clip of ISL words: the predefined database holds a video for each separate word, and the output video is a merge of those word videos.
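Merging the per-word clips into one output video can be done with FFmpeg's concat demuxer, which reads a text file listing the clips in order. The sketch builds that list from the word sequence. The `videos/<word>.mp4` layout and the output file names are assumptions, and lossless joining with `-c copy` works only when all clips share the same codec settings.

```python
import subprocess
from pathlib import Path
from typing import List


def concat_list(words: List[str], video_dir: str = "videos") -> str:
    """Build an FFmpeg concat-demuxer list: one 'file' line per word clip, in order."""
    lines = []
    for word in words:
        path = f"{video_dir}/{word}.mp4"
        # Inside single quotes, a literal quote is written as '\'' in this format.
        escaped = path.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def merge_clips(words: List[str], output: str = "output.mp4",
                list_path: str = "clips.txt") -> None:
    """Write the list file and run FFmpeg (must be installed) to join the clips."""
    Path(list_path).write_text(concat_list(words))
    cmd = ["ffmpeg", "-y", "-f", "concat", "-safe", "0",
           "-i", list_path, "-c", "copy", output]
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    print(concat_list(["how", "are", "you"]), end="")
```

Generating the list file separately from running FFmpeg keeps the word-to-clip logic easy to check without touching any video files.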

Machines can only process binary data (0s and 1s), so how can a machine understand human language? Natural Language Processing (NLP), a combination of artificial intelligence and computational linguistics, was introduced for this purpose. NLP is a machine's ability to process and structure the text it receives, understand the meaning of the words, and produce output accordingly. Text preprocessing consists of three steps, tokenization, normalization and noise removal, as shown in Fig. 6. Understanding human language is not an easy task for a machine, but NLP makes it possible; voice assistants such as Cortana and Siri, for example, use NLP to return information in response to audio input.

In practice, NLP involves creating algorithms that split text into words and label them based on their position and function in the sentence. Human language is thereby converted meaningfully into a numerical form, which allows computers to capture the nuances implicitly encoded in our language.

In our project, the process works as follows:

1) We give audio as input to the machine, and the machine records it.

2) The machine translates the audio into text and displays it on the screen.

3) The NLP system parses the text into components and understands the context of the conversation and the intention of the person.

4) The machine decides which command to execute, based on the results of NLP.

5) Finally, dictionary-based machine translation is performed.
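The three preprocessing steps (tokenization, normalization and noise removal) can be sketched with the standard library alone. The regular expression and the stop-word list are illustrative assumptions, and the project's own NLP step may rely on a library such as NLTK instead. Here punctuation is discarded during tokenization, and stop words are removed as noise because they do not need a sign of their own.

```python
import re
import unicodedata
from typing import List

# Illustrative stop-word list; real systems typically use a fuller list (e.g. NLTK's).
STOP_WORDS = {"a", "an", "the", "is", "are", "am", "to", "of", "and"}
# A word is a run of letters/digits, optionally followed by a contraction like "'t".
TOKEN_PATTERN = re.compile(r"[a-z0-9]+(?:'[a-z]+)?")


def normalize(text: str) -> str:
    """Normalization: unify Unicode forms, curly apostrophes and letter case."""
    text = unicodedata.normalize("NFKC", text)
    return text.replace("\u2019", "'").lower()


def tokenize(text: str) -> List[str]:
    """Tokenization: extract word tokens; punctuation is discarded here."""
    return TOKEN_PATTERN.findall(text)


def remove_noise(tokens: List[str]) -> List[str]:
    """Noise removal: drop stop words that do not need a sign of their own."""
    return [t for t in tokens if t not in STOP_WORDS]


def preprocess(text: str) -> List[str]:
    return remove_noise(tokenize(normalize(text)))


if __name__ == "__main__":
    print(preprocess("The boy is going to SCHOOL, isn\u2019t he?"))
    # ['boy', 'going', 'school', "isn't", 'he']
```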

When you speak "How Are You" into the microphone, the output pops up as separate letters.

V. FUTURE WORK

Future work includes improving the web page for the sign language converter and adding new functionality. Various front-end options, such as .NET or an Android app, could be used to make the system cross-platform and increase its availability. Although facial expressions are widely recognized as an essential part of sign language, this project did not focus on them. We are excited to continue the project by incorporating facial expressions into the system.

Conclusion

A sign language converter is useful in a variety of situations. We conclude that the audio/text to sign language converter for hearing-impaired people has been developed successfully. Anyone can use this system to learn and communicate in sign language in schools, colleges, universities, hospitals, airports and courts. It facilitates communication between people with normal hearing and people who have difficulty hearing or speaking.

References

[1] S. Shrenika and M. Madhu Bala, "Sign Language Recognition Using Template Matching Technique," 2020 International Conference on Computer Science, Engineering and Applications (ICCSEA), Gunupur, India, 2020, pp. 1-5, doi: 10.1109/ICCSEA49143.2020.9132899.

[2] A. Harkude, S. Namade, S. Patil and A. Morey, "Audio to Sign Language Translation for Deaf People," IJEIT, vol. 9, no. 10, April 2020.

[3] M. Sanzidul Islam, N. A. Jessan, A. Shahariar Azad Rabbi and S. Akhter Hossain, "Ishara-Lipi: The First Complete Multipurpose Open Access Dataset of Isolated Characters for Bangla Sign Language," 2018 International Conference on Bangla Speech and Language Processing (ICBSLP), Sylhet, Bangladesh, 2018, pp. 1-4, doi: 10.1109/ICBSLP.2018.8554466.

[4] S. Tornay, M. Razavi and M. Magimai.Doss, "Towards Multilingual Sign Language Recognition," ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 2020, pp. 6309-6313, doi: 10.1109/ICASSP40776.2020.9054631.

[5] M. Xie and X. Ma, "End-to-End Residual Neural Network with Data Augmentation for Sign Language Recognition," 2019 IEEE 4th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chengdu, China, 2019, pp. 1629-1633, doi: 10.1109/IAEAC47372.2019.8998073.

[6] S. He, "Research of a Sign Language Translation System Based on Deep Learning," 2019 International Conference on Artificial Intelligence and Advanced Manufacturing (AIAM), Dublin, Ireland, 2019, pp. 392-396, doi: 10.1109/AIAM48774.2019.00083.

[7] K. Saija, S. Sangeetha and V. Shah, "WordNet Based Sign Language Machine Translation: from English Voice to ISL Gloss," 2019 IEEE 16th India Council International Conference (INDICON), Rajkot, India, 2019, pp. 1-4, doi: 10.1109/INDICON47234.2019.9029074.

[8] H. Muthu Mariappan and V. Gomathi, "Real-Time Recognition of Indian Sign Language," 2019 International Conference on Computational Intelligence in Data Science (ICCIDS), Chennai, India, 2019, pp. 1-6, doi: 10.1109/ICCIDS.2019.8862125.

[9] D. Pahuja and S. Jain, "Recognition of Sign Language Symbols using Templates," 2020 8th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 2020, pp. 1157-1160, doi: 10.1109/ICRITO48877.2020.9198001.

[10] F. M. Shipman and C. D. D. Monteiro, "Crawling and Classification Strategies for Generating a Multi-Language Corpus of Sign Language Video," 2019 ACM/IEEE Joint Conference on Digital Libraries (JCDL), Champaign, IL, USA, 2019, pp. 97-106, doi: 10.1109/JCDL.2019.00023.

Copyright

Copyright © 2023 Dhulipalla Tejaswi, Kalva Yvonne Shiny, Challa Iswarya, Nimmakayala Vasanthi. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET51579

Publish Date : 2023-05-04

ISSN : 2321-9653

Publisher Name : IJRASET
